In an agile context, we use metrics to set performance goals, measure current conditions, define small improvement experiments and measure the effectiveness of the experiments in order to inspect and adapt goals and determine the next steps.

Metrics need to be chosen with care because when poorly chosen, they create an illusion of control. The metric data might deliver green dashboards while in reality, our organisation is not performing very well. This article aims to give you a couple of tips to become more aware of your metrics.

In summary, some tips:

- Ensure your metrics are connected to your vision and mission

- Take complexity into account (do not assume linear cause and effect)

- Embed the metrics in the work so they do not become a separate goal

- Measure “outside-in”

- Focus on outcomes (impact) rather than output

- Build up and revise your metrics as you go

Connect metrics to vision and mission

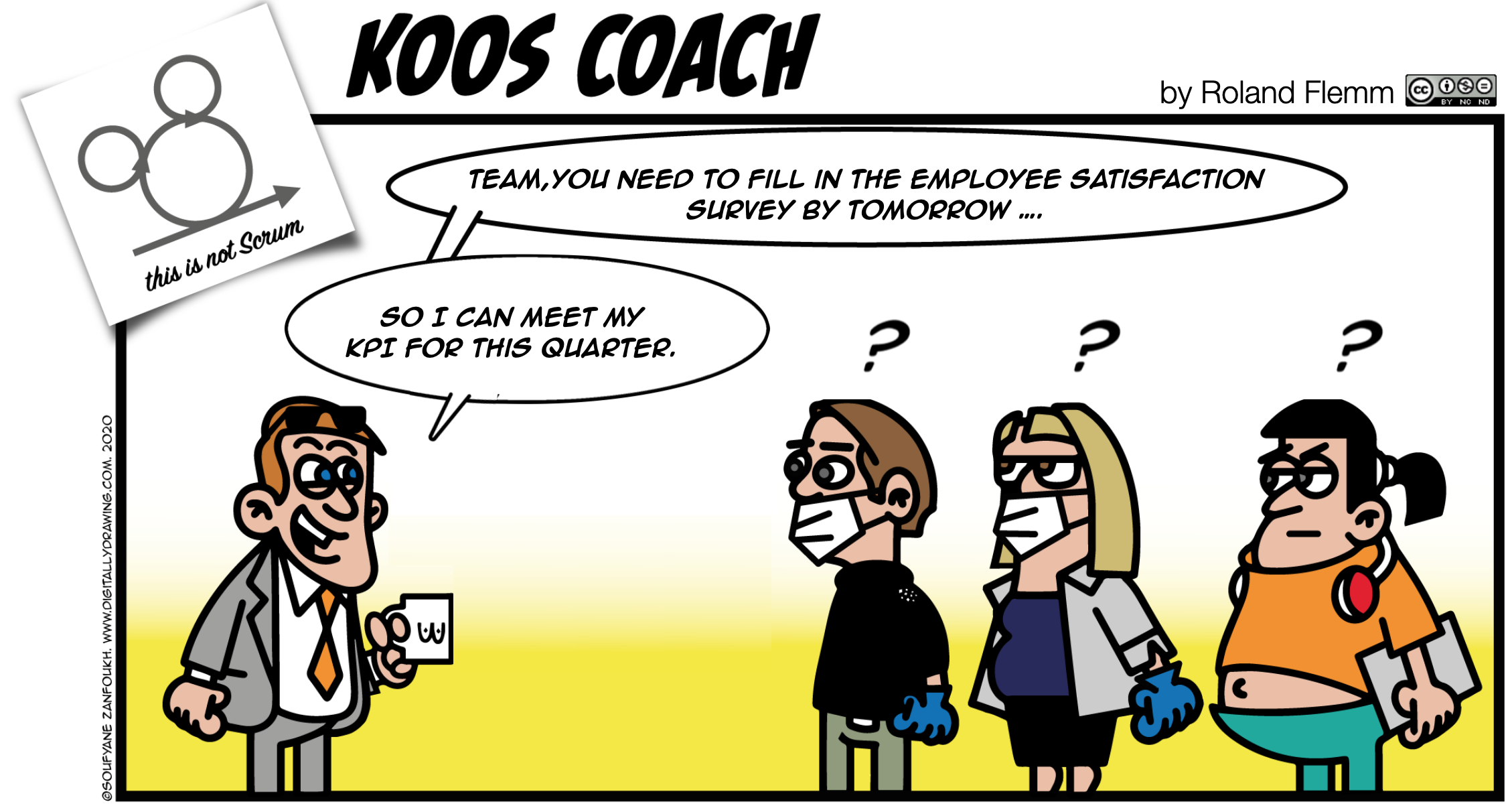

Our vision and mission encapsulate the goal we want to achieve. If we want to evaluate how well we are executing our strategy to accomplish that goal, it makes sense to define metrics that help us evaluate just that. Yet coupling KPIs or metrics directly to strategic goals is often forgotten. I see many metrics being applied because they are available or because they are industry standards, not because they really match the (Product) Goal.

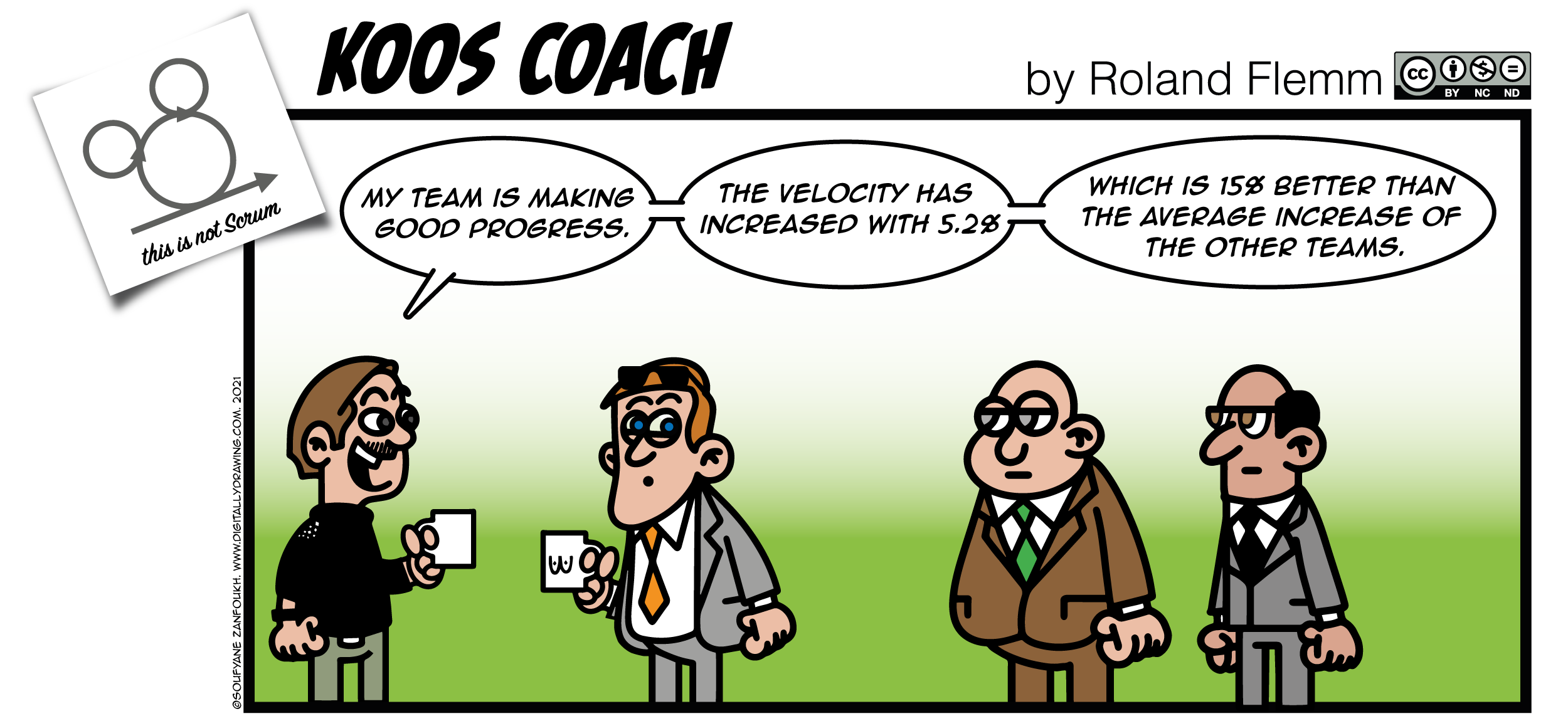

If we collect metrics that serve as KPIs for teams, we need to make sure we are not giving the teams goals other than our main goal (delivering customer value). Giving more than one goal decreases focus, which has a negative impact on our performance. The Product Goal and the Sprint Goal are what we want the teams to focus on. To prevent creating multiple (contradicting) goals, try to express KPIs through the Product Goal and Sprint Goal.

Organisations and processes are complex adaptive systems

Choosing proper metrics is not easy because organisations are complex adaptive systems. It is very difficult, if not impossible, to measure cause and effect because in most cases there is no linear relationship between the two. Metrics aim at influencing behaviour and therefore have a tight relationship with an organisation's culture.

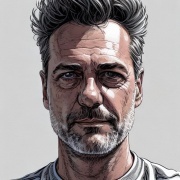

The results of our measurements can change behaviour. But simply the act of implementing metrics influences the system. This is known as the observer effect: Observing affects what is being observed. This effect increases complexity through undesired and unforeseen behavioural side-effects. For example, knowing that velocity is monitored influences the estimation of story points by the team.

It is easy to create dashboards filled with metrics. Applying metrics will increase the complex behaviour of our system and the dashboards are more likely to blur than to enlighten our vision. Limit the number of metrics and choose with care.

Embed data collection in the working process

The preferred way to collect data is without bothering anyone. The number of bugs or the time spent on a webpage can be registered without anyone making an additional effort to record the data, as opposed to asking an employee to enter certain data in a spreadsheet during the day.

If the data collection is not embedded in the system, the data will become unreliable. It will be incomplete because not everything is captured, and it will be registered incorrectly when the person entering it does not understand the purpose of the metric. On top of that, adding a data collection task to the work reduces focus.

Measuring outside-in increases transparency

Some random examples of common metrics that you probably are familiar with:

- Employee happiness metric

- Agile maturity score

- Team velocity

- Number of bugs

- Lines of committed code

In the above examples, the data is collected inside the system. “System” in this context means all processes and people involved in creating and managing a service or a product. In most cases, the system is everybody in your department or in your company. Measuring inside-out means the metric output is created and delivered by actors inside the system. The problem with inside-out metrics is that people inside the system will compromise the metrics sooner or later, especially if there is some kind of (indirect) relationship between their performance evaluation and the metric. This is human nature and inevitable.

Measuring outside-in means we probe actors outside our system. These actors have no stake in the internal system dynamics, so we know they cannot compromise the data.

Consider a company where the performance of QAs is measured by the number of bugs they report. The QAs can easily inflate their performance reports by registering more bugs. This might seem like a silly example, but I have seen it happen many times, just like rewarding developers for the number of committed lines of code. Although these metrics are implemented with good intentions, they generally do not create more customer value.

If we collect data outside our system, we rule out the risk of the data being compromised. We know for sure that we deliver good service if customers recommend us to friends. The Net Promoter Score (NPS) is the industry standard for this. However, the NPS is not very accurate because it measures intent, not real behaviour. We would do better to measure how often customers return, or to monitor recommendations of our service on social media. These metrics are external to our system: they measure voluntary customer behaviour. Their downside is that they are lagging: it takes a while before we see the results (in the form of trends).
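To make the difference between intent and behaviour concrete, here is a minimal Python sketch of both kinds of metric. The NPS formula is the standard one (share of promoters scoring 9 or 10, minus share of detractors scoring 0 to 6). The function names, the `repeat_customer_rate` definition and the input shapes are assumptions for illustration, not part of any particular tool.

```python
from collections import Counter


def net_promoter_score(scores):
    """NPS on a 0-10 survey scale: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("need at least one survey response")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100.0 * (promoters - detractors) / len(scores)


def repeat_customer_rate(order_customer_ids):
    """Share of customers who ordered more than once (hypothetical definition).

    `order_customer_ids` holds one customer id per order.
    """
    if not order_customer_ids:
        raise ValueError("need at least one order")
    orders_per_customer = Counter(order_customer_ids)
    returning = sum(1 for n in orders_per_customer.values() if n > 1)
    return returning / len(orders_per_customer)
```

The first function summarises what customers say they will do, the second what they actually did. Tracking the second over time gives the lagging but harder-to-game trend described above.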

In many companies, HR runs recurring surveys to measure employee happiness. “On a scale from 1 to 10, how happy are you in your team?” This data can be helpful. However, if we aim to measure employee happiness, measuring the number of employees leaving per month provides a more objective view of employee happiness. The downside is that this result does not tell us a lot about the reasons for leaving. Combining these outcomes with employee survey outputs gives us cues on what to research to discover employee happiness improvements.
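As a rough sketch of the leavers metric, the monthly attrition rate can be computed as the number of leavers divided by the average headcount in that month. Dividing by the average headcount is one common convention and an assumption here; the function and parameter names are hypothetical.

```python
def monthly_attrition_rate(headcount_start, headcount_end, leavers):
    """Leavers in a month divided by the average headcount of that month.

    Using the average of start and end headcount is an assumed convention;
    some organisations divide by the starting headcount instead.
    """
    average_headcount = (headcount_start + headcount_end) / 2
    if average_headcount <= 0:
        raise ValueError("average headcount must be positive")
    if leavers < 0:
        raise ValueError("leavers cannot be negative")
    return leavers / average_headcount
```

With 100 people at the start of the month, 96 at the end and 4 leavers, this gives 4 / 98, or roughly 4%. The number itself says nothing about why people left, which is where the survey results come in.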

Measuring inside the system can be very valuable if done right. There is a lot of data we would like to have fast (leading data) that resides inside the system. The trick here is to choose a metric that will produce the desired behaviour and that is unlikely to have negative side effects. If we want to deliver faster to the customer, we could monitor release frequency. Even if this metric is perceived as a team performance metric, it will push teams to invest in speeding up their delivery process, which is generally a good thing.
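Release frequency is also a metric that can be collected without bothering anyone, for example from the dates of release tags. A minimal sketch, assuming the release dates are already available as a list (the function name and the per-week unit are illustrative choices):

```python
from datetime import date


def releases_per_week(release_dates):
    """Average number of release intervals per week between first and last release."""
    if len(release_dates) < 2:
        raise ValueError("need at least two releases to compute a frequency")
    span_days = (max(release_dates) - min(release_dates)).days
    if span_days == 0:
        raise ValueError("releases must span more than one day")
    intervals = len(release_dates) - 1
    return intervals / (span_days / 7)


# Hypothetical release dates, e.g. taken from version-control tags.
releases = [date(2024, 1, 1), date(2024, 1, 8), date(2024, 1, 15), date(2024, 1, 29)]
frequency = releases_per_week(releases)
```

Four releases spread over four weeks give three intervals, so `frequency` is 0.75 releases per week. The trend of this number matters more than any single value.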

Prefer outcomes over output

Metrics are numbers, output of a process. “The number of bugs reported today is 25”. What does this number tell us about software quality? It depends on what is considered to be a bug, the number that was reported yesterday, who is reading that number and many other factors we don’t know. Output only becomes valuable when it is placed in a context so that the data can be interpreted. Maybe the goal of measuring the number of bugs was to get information on the quality of our product. In a different context, the number of bugs could be related to the quality of a tester.

To monitor the performance of QAs, we should ask ourselves: “What is the desired behaviour I would like to observe?” Followed by: “How can I measure that?” Coupling team rewards to customer satisfaction is a sensible approach to achieve this.

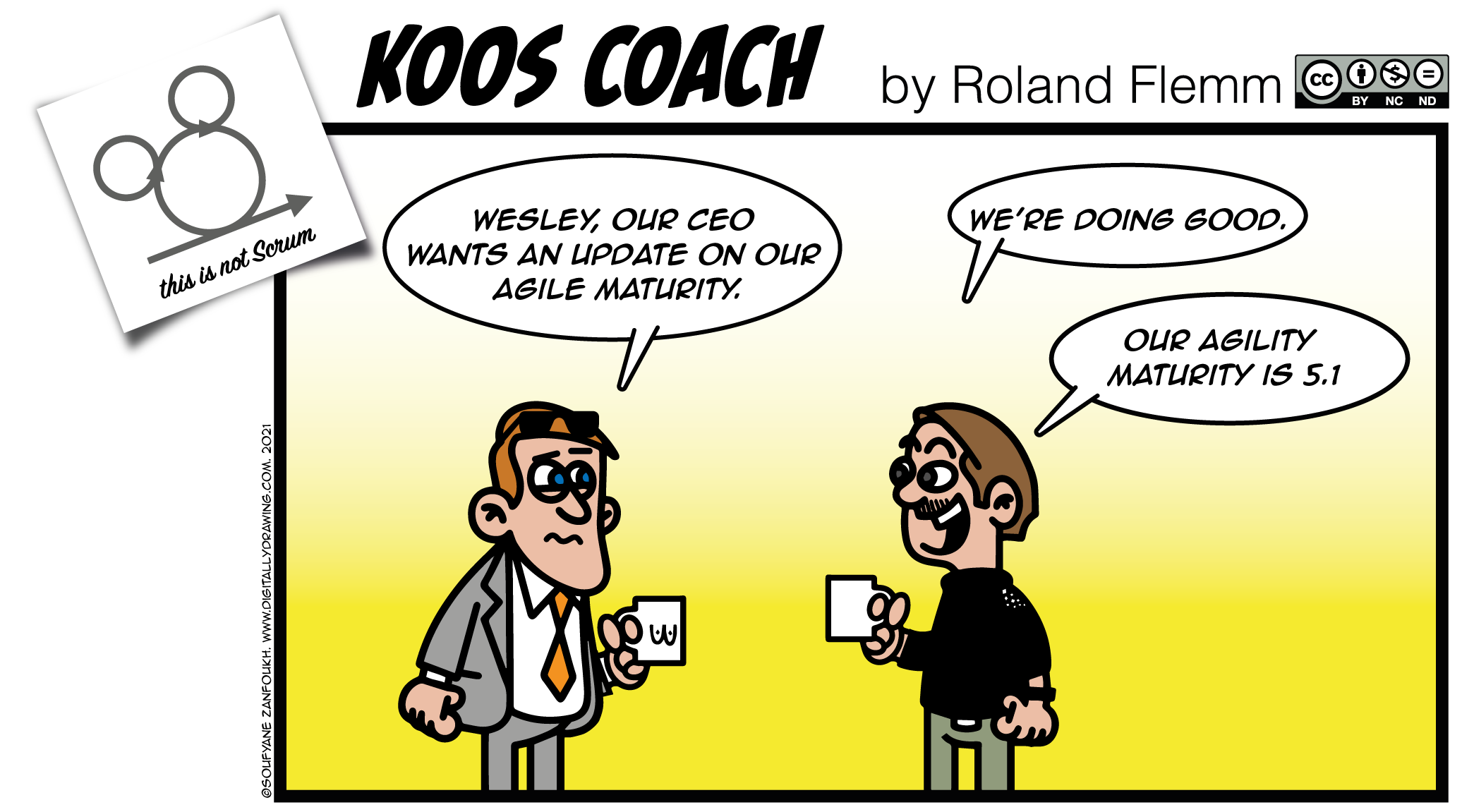

We all know the (Spotify) agile maturity scan. The “agile maturity” metric tries to measure how agile the team is. When done right, it measures behaviour, which is a good thing. Scrum Masters collect data by scoring a series of questions. Every quarter, teams spend a reasonable amount of time discussing behaviour and challenges. The value of the maturity scan is in generating reflective conversations. Sadly, the results are mostly reported as numbers to management to rate the success of their agile transformation program. This is where the maturity scan loses its value and becomes counter-productive: Teams fill in the scan to satisfy the system, not to do a self-assessment.

Metrics change too

The PO will craft a great Product Goal and choose metrics to measure progress toward that goal. The PO should incorporate that metric in every Sprint Review. But there is also a need to measure the performance of our development efforts. Scrum will tell us what the next improvement is to focus on. Measuring the effect of that improvement is our next performance metric.

And oh, don’t be afraid to remove metrics. Many metrics lose value over time because we get better at what we do.

In summary

Choose metrics that measure your goal. Embed metrics in the work to keep focus on the work. Prefer outcomes over outputs and make sensible combinations. Favour fewer metrics and beware of applying metrics “just because they are available”. Let your Retrospectives guide you and clean up your dashboard every once in a while.

For more information on metrics, consider reading the Evidence-Based Management Guide by Scrum.org.